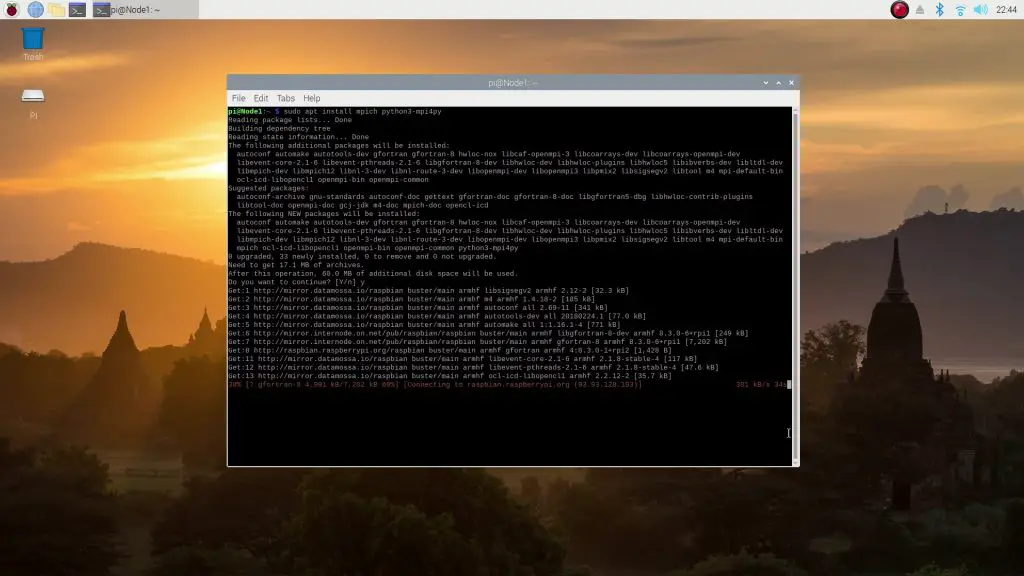

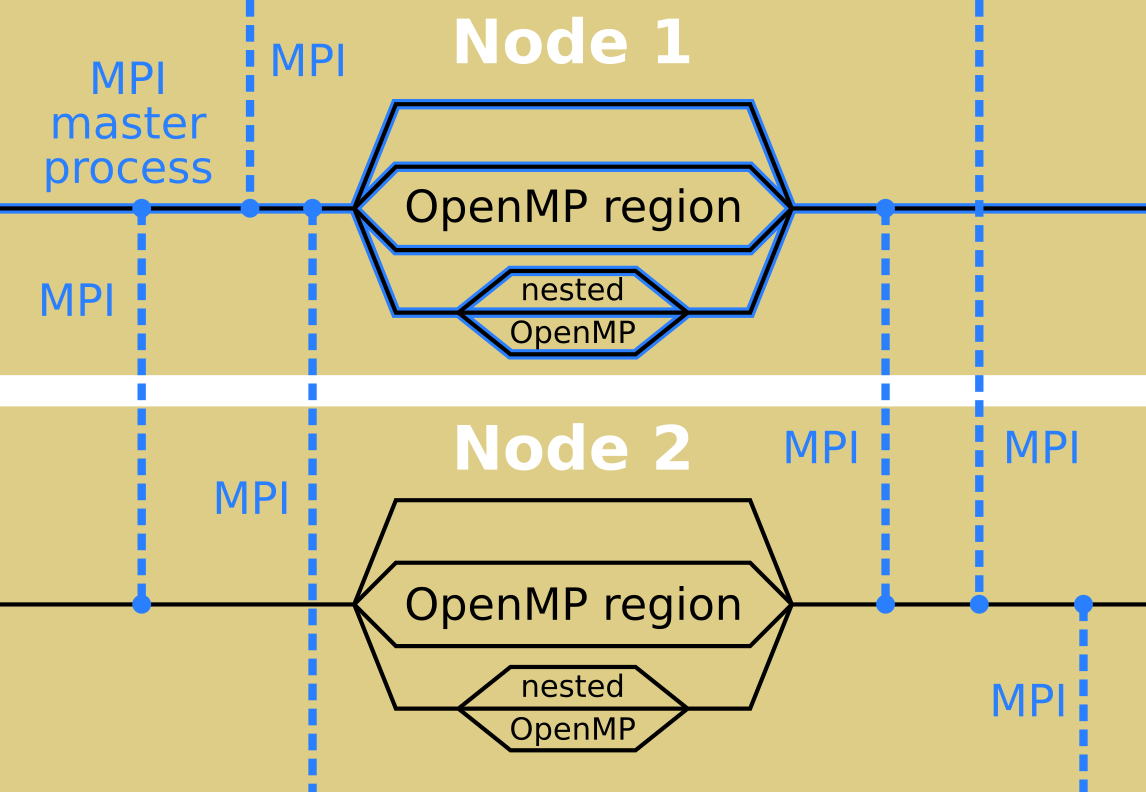

The MPI launcher `mpirun` is used to start `myapp`. It takes care of starting multiple instances of `myapp` and distributes these instances across the nodes in a cluster, as shown in the picture above.

This program can be compiled and linked with the compiler wrappers provided by the MPI implementation, such as `mpicc` for C.

# Install MPI on node free

Note that in MPI a process is usually called a "rank", as indicated by the call to `MPI_Comm_rank()` below. The program performs the following steps:

* Determine the unique id (rank) of the calling process among all processes participating in this MPI program, using `MPI_Comm_rank()`.
* Send the message "Hello, there" from the process with rank 0 to the process with rank 1: `MPI_Send(message, strlen(message)+1, MPI_CHAR, 1, tag, MPI_COMM_WORLD)`. The `+1` makes the terminating null character part of the message.
* Receive a message with a maximum length of 20 characters from the process with rank 0: `MPI_Recv(message, 20, MPI_CHAR, 0, tag, MPI_COMM_WORLD, &status)`.
* Finalize the MPI library with `MPI_Finalize()` to free resources acquired by it.

# Install MPI on node code

MPI, the Message Passing Interface, is a standard API for communicating data via messages between distributed processes. It is commonly used in HPC to build applications that can scale to multi-node computer clusters. Because MPI is concerned with communication between processes, it is fully compatible with CUDA, which is designed for parallel computing on a single computer or node. There are many reasons for wanting to combine the two parallel programming approaches of MPI and CUDA. A common reason is to enable solving problems with a data size too large to fit into the memory of a single GPU, or that would require an unreasonably long compute time on a single node. Another reason is to accelerate an existing MPI application with GPUs, or to enable an existing single-node multi-GPU application to scale across multiple nodes. With CUDA-aware MPI these goals can be achieved easily and efficiently. In this post I will explain how CUDA-aware MPI works, why it is efficient, and how you can use it. I will be presenting a talk on CUDA-Aware MPI at the GPU Technology Conference next Wednesday at 4:00 pm in room 230C, so come check it out!

# A Very Brief Introduction to MPI

Before I explain what CUDA-aware MPI is all about, let's quickly introduce MPI for readers who are not familiar with it. The processes involved in an MPI program have private address spaces, which allows an MPI program to run on a system with a distributed memory space, such as a cluster. The MPI standard defines a message-passing API which covers point-to-point messages as well as collective operations like reductions. The example above outlines a very simple MPI program in C which sends the message "Hello, there" from process 0 to process 1.
